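# Written against the TensorFlow 1.x graph API (tf.placeholder, tf.Session).
# Under TensorFlow 2.x, one option is `import tensorflow.compat.v1 as tf`
# followed by `tf.disable_v2_behavior()`.
import numpy as np
import tensorflow as tf
import matplotlib.pyplot as plt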

'''
TensorFlow: a first neural network
Input layer (1 neuron) -> hidden layer (10 neurons) -> output layer (1 neuron)
'''

def add_layer(inputs, in_size, out_size, activation_function=None):
    """Add one fully connected layer and return its output tensor."""
    # Weights start from a standard normal; biases start slightly positive (0.1).
    Weights = tf.Variable(tf.random_normal([in_size, out_size]))
    biases = tf.Variable(tf.zeros([1, out_size]) + 0.1)
    Wx_plus_b = tf.matmul(inputs, Weights) + biases
    # With no activation function the layer is purely linear.
    if activation_function is None:
        outputs = Wx_plus_b
    else:
        outputs = activation_function(Wx_plus_b)
    return outputs

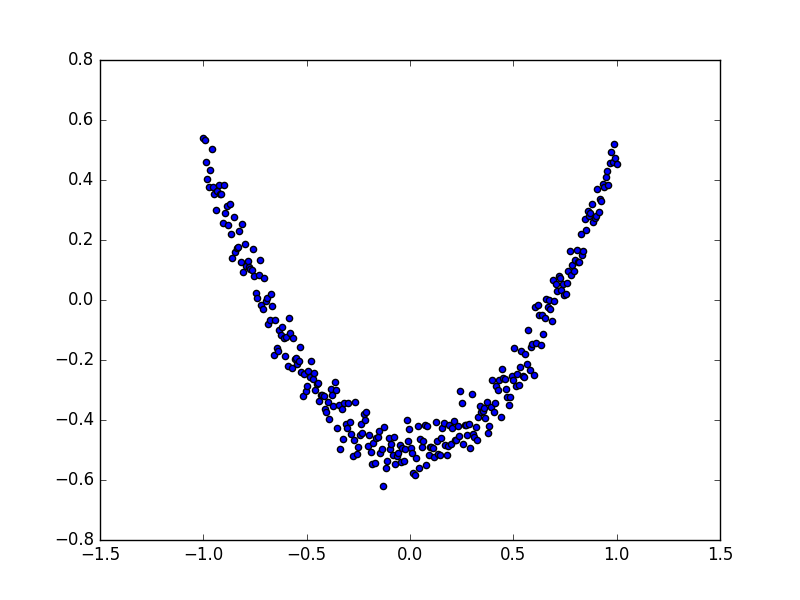

# Training data: 300 points on [-1, 1] with y = x^2 - 0.5 plus Gaussian noise (std 0.05).
x_data = np.linspace(-1, 1, 300, dtype=np.float32)[:, np.newaxis]
noise = np.random.normal(0, 0.05, x_data.shape).astype(np.float32)
y_data = np.square(x_data) - 0.5 + noise

# Placeholders for the network inputs and targets; None allows any batch size.
xs = tf.placeholder(tf.float32, [None, 1])
ys = tf.placeholder(tf.float32, [None, 1])

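# Hidden layer: 1 input -> 10 units with ReLU.
l1 = add_layer(xs, 1, 10, activation_function=tf.nn.relu)
# Output layer: 10 units -> 1 linear output.
prediction = add_layer(l1, 10, 1, activation_function=None)
# Mean squared error; `axis` replaces the deprecated `reduction_indices` argument.
loss = tf.reduce_mean(tf.reduce_sum(tf.square(ys - prediction), axis=[1]))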

# Plain gradient descent with learning rate 0.1.
train_step = tf.train.GradientDescentOptimizer(0.1).minimize(loss)

# Create the variable initializer, open a session, and run the initializer.
init = tf.global_variables_initializer()
sess = tf.Session()
sess.run(init)

# Scatter-plot the training data; interactive mode keeps plt.show() from blocking.
fig = plt.figure()
ax = fig.add_subplot(1, 1, 1)
ax.scatter(x_data, y_data)
plt.ion()
plt.show()

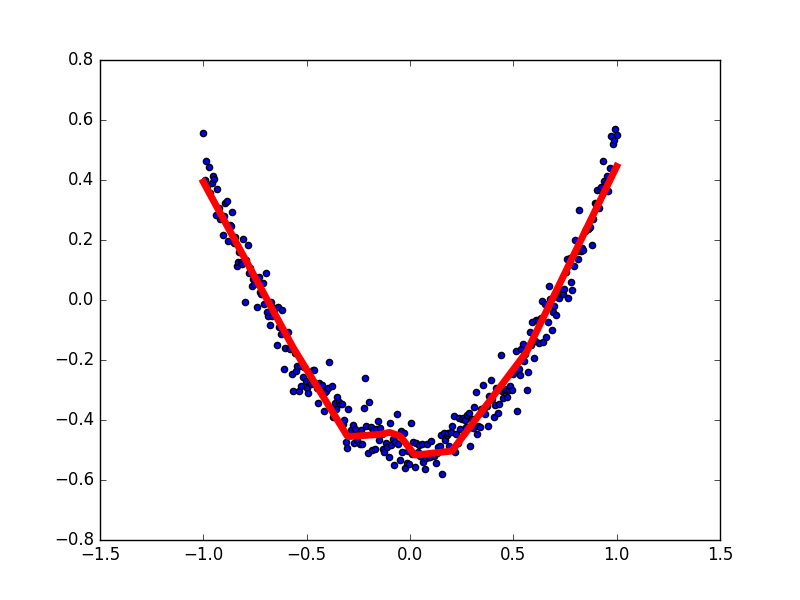

lines = None  # handle to the most recently drawn prediction curve
for i in range(1000):
    # One gradient-descent step on the full data set.
    sess.run(train_step, feed_dict={xs: x_data, ys: y_data})
    if i % 50 == 0:
        # Report the loss and redraw the fitted curve every 50 steps.
        print(sess.run(loss, feed_dict={xs: x_data, ys: y_data}))
        if lines is not None:
            # Line2D.remove() is used because newer Matplotlib releases
            # no longer support ax.lines.remove(...).
            lines[0].remove()
        prediction_value = sess.run(prediction, feed_dict={xs: x_data})
        lines = ax.plot(x_data, prediction_value, 'r-', lw=5)
        plt.pause(0.1)
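
As a rough illustration of what `add_layer` computes, the following plain-Python sketch reproduces the 1 -> 10 -> 1 forward pass and the loss without TensorFlow. The helper names `dense`, `relu`, and `mse`, the fixed random seed, and the three-point batch are illustrative assumptions, not part of the original script. The weights are random and untrained, so the loss value itself carries no meaning; the point is only to make the shapes and the arithmetic concrete.

```python
import random


def relu(values):
    """Element-wise max(0, x) over a list of floats."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases, activation=None):
    """Compute activation(inputs @ weights + biases) row by row.

    inputs:  batch x in_size (list of rows)
    weights: in_size x out_size
    biases:  out_size
    """
    outputs = []
    for row in inputs:
        z = [
            sum(row[i] * weights[i][j] for i in range(len(row))) + biases[j]
            for j in range(len(biases))
        ]
        outputs.append(activation(z) if activation else z)
    return outputs


def mse(targets, preds):
    """Mean over the batch of the per-row sum of squared errors."""
    per_row = [
        sum((t - p) ** 2 for t, p in zip(t_row, p_row))
        for t_row, p_row in zip(targets, preds)
    ]
    return sum(per_row) / len(per_row)


rng = random.Random(0)  # fixed seed, chosen only for repeatability
w1 = [[rng.gauss(0, 1) for _ in range(10)]]   # 1 x 10
b1 = [0.1] * 10
w2 = [[rng.gauss(0, 1)] for _ in range(10)]   # 10 x 1
b2 = [0.1]

x_batch = [[-1.0], [0.0], [1.0]]
y_batch = [[x[0] ** 2 - 0.5] for x in x_batch]  # noise-free targets

hidden = dense(x_batch, w1, b1, activation=relu)  # 3 x 10
pred = dense(hidden, w2, b2)                      # 3 x 1
loss = mse(y_batch, pred)
print(len(hidden), len(hidden[0]), len(pred), len(pred[0]))
```

The TensorFlow version does the same arithmetic with `tf.matmul` on whole tensors, and it additionally tracks gradients so that the optimizer can update the weights and biases.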